
I am a PhD candidate in Mechanical Engineering at the Biomimetics & Dexterous Manipulation Laboratory (BDML) at Stanford University, advised by Prof. Mark Cutkosky. During my master's program, I also worked at the Collaborative Haptics and Robotics in Medicine (CHARM) Lab, advised by Prof. Allison Okamura. I received my M.S. degree in Mechanical Engineering from Stanford University in 2025 and my B.Eng. degree in Robotics Engineering from Beijing University of Chemical Technology (BUCT) in 2023. I was a research assistant at The Chinese University of Hong Kong (CUHK) from 2022 to 2024, working with Prof. Jiewen Lai and Prof. Hongliang Ren. I care deeply about building a welcoming and inclusive research community. If you'd like to chat about research, career paths, or anything else, feel free to reach out, especially if you're from an underrepresented group in STEM. I'm always happy to connect and help where I can.

Email: tianao [at] stanford.edu


I am interested in medical robotics, tactile sensing, soft robotics, and sim-to-real applications. Most of my research focuses on sensing-driven and learning-based approaches for safe interaction and navigation in medical and clinical environments.


Jiewen Lai*, Tian-Ao Ren* (co-first author), Pengfei Ye, Yanjun Liu, Jingyao Sun, Hongliang Ren. International Journal of Robotics Research (IJRR), 2025. Paper. TL;DR: Embodying gravity sensation in soft slender robots with a minimalist setup.


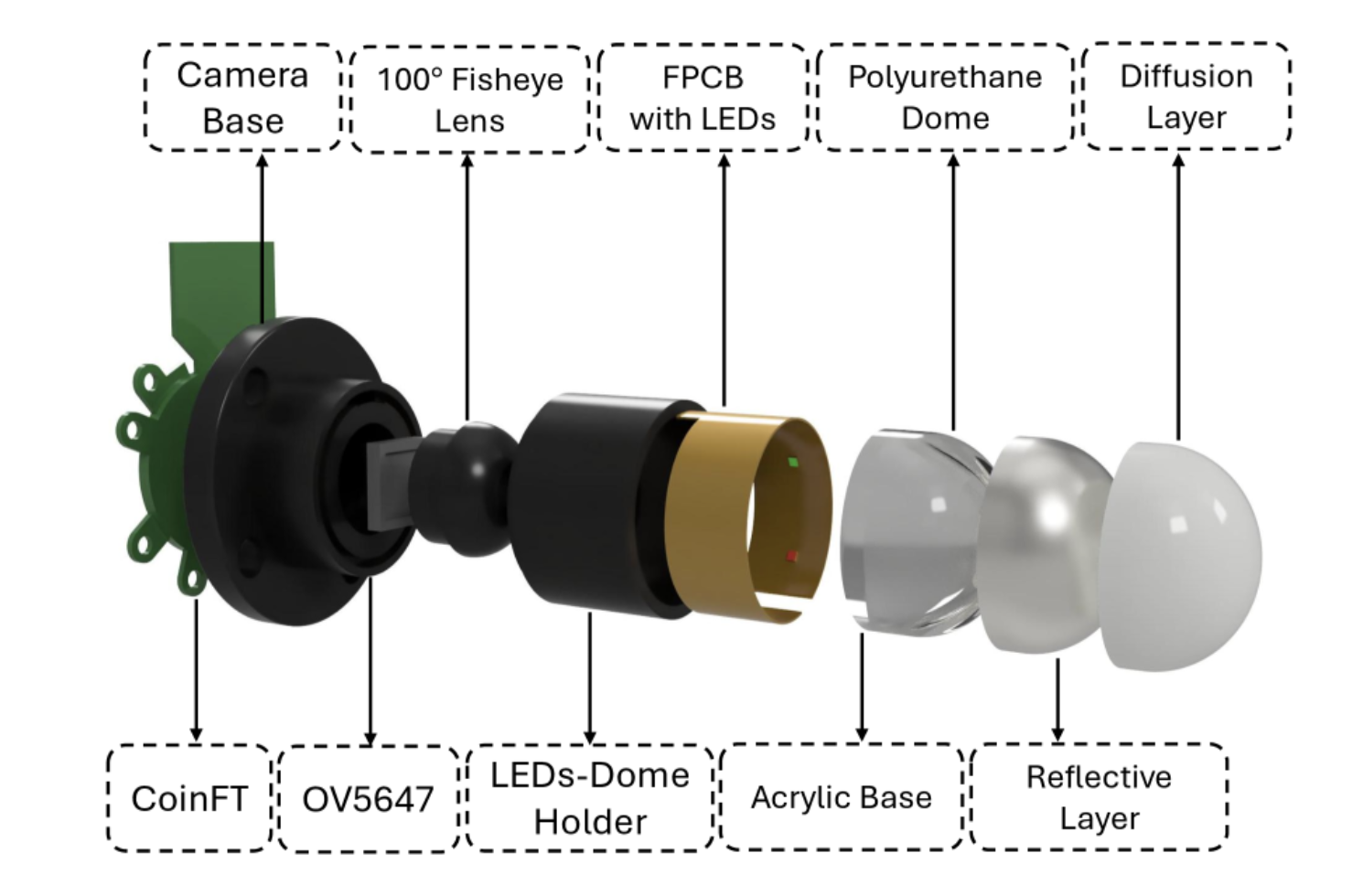

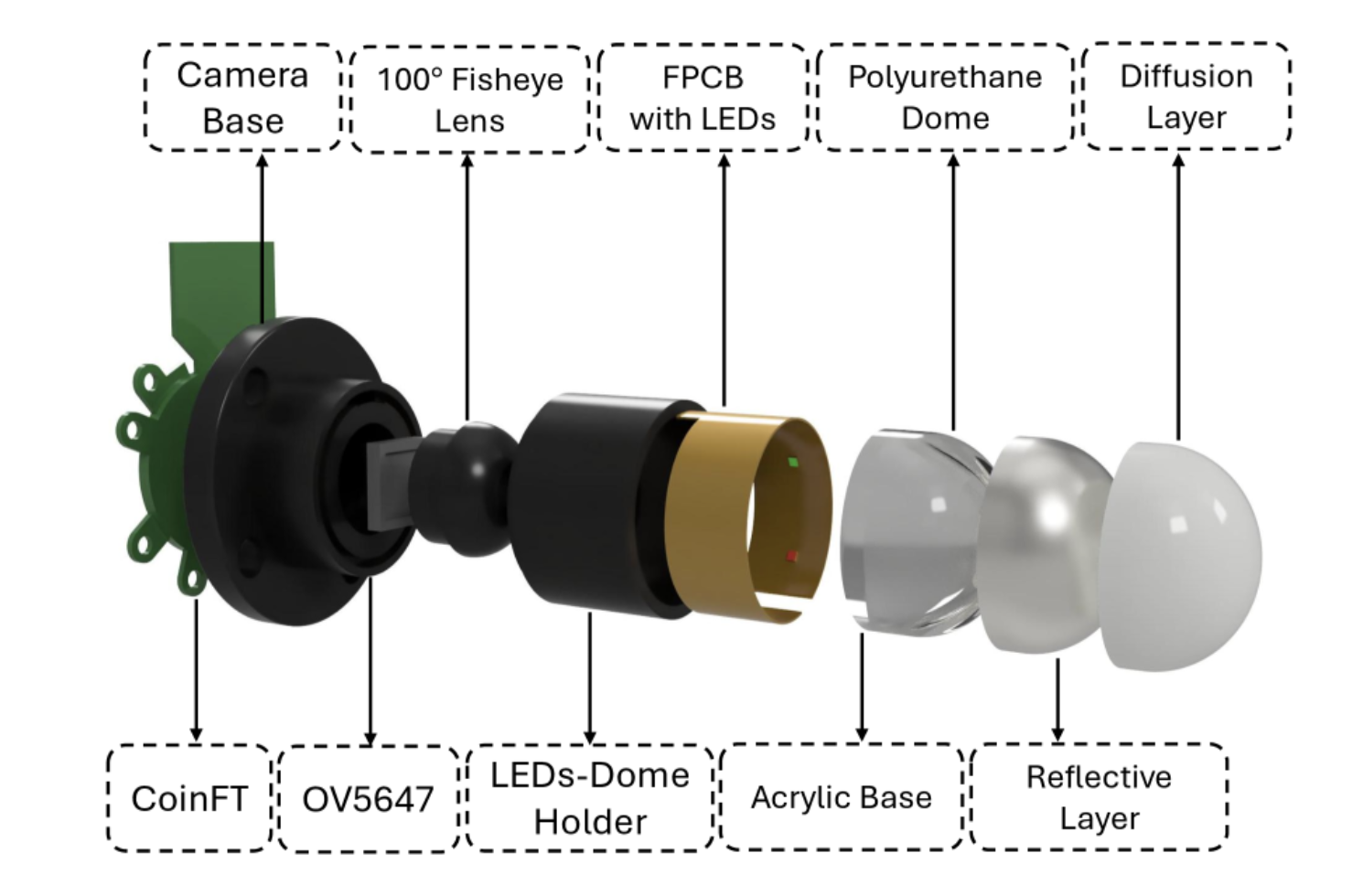

Tian-Ao Ren, Jorge Garcia, Seongheon Hong, Jared Grinberg, Hojung Choi, Julia Di, Hao Li, Dmitry Grinberg, Mark R. Cutkosky. Design of Medical Devices Conference (DMD), 2026. arXiv. TL;DR: Combining vision-based tactile imaging with force-torque sensing enables robots to reliably detect subsurface tendon features during physiotherapy palpation, where force signals alone are often ambiguous, while still maintaining safe and controlled contact.


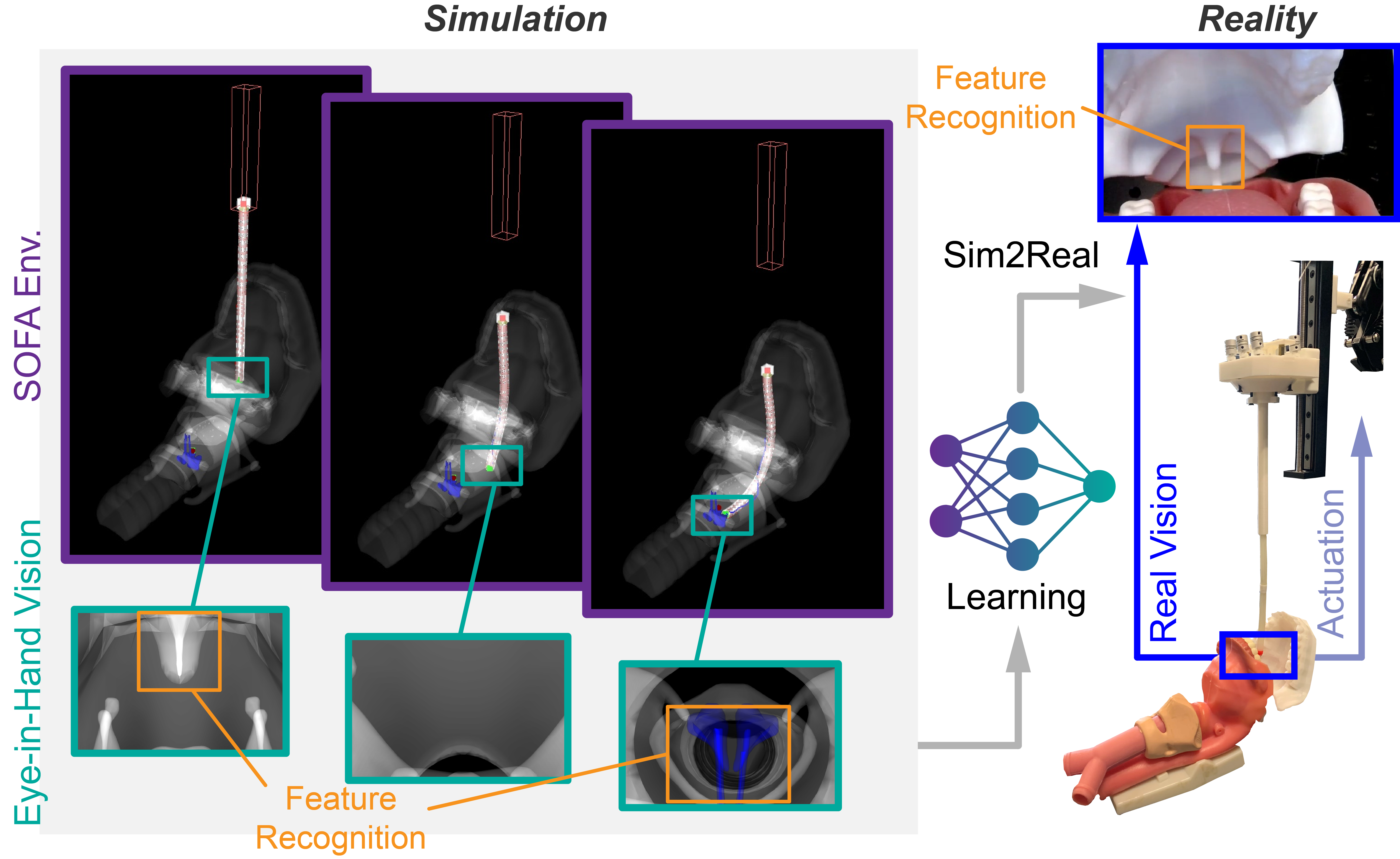

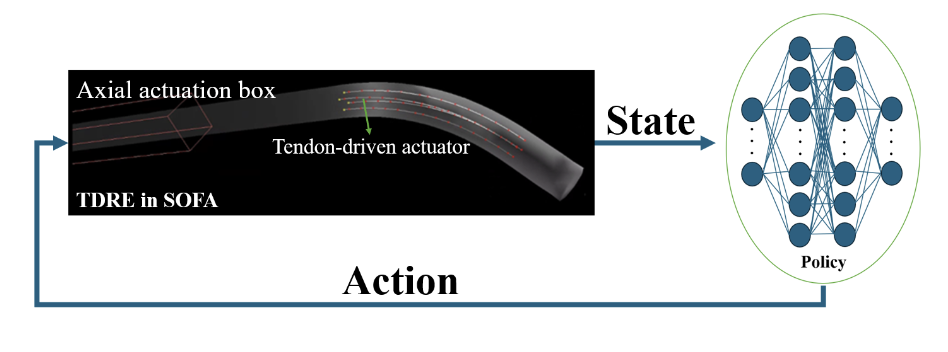

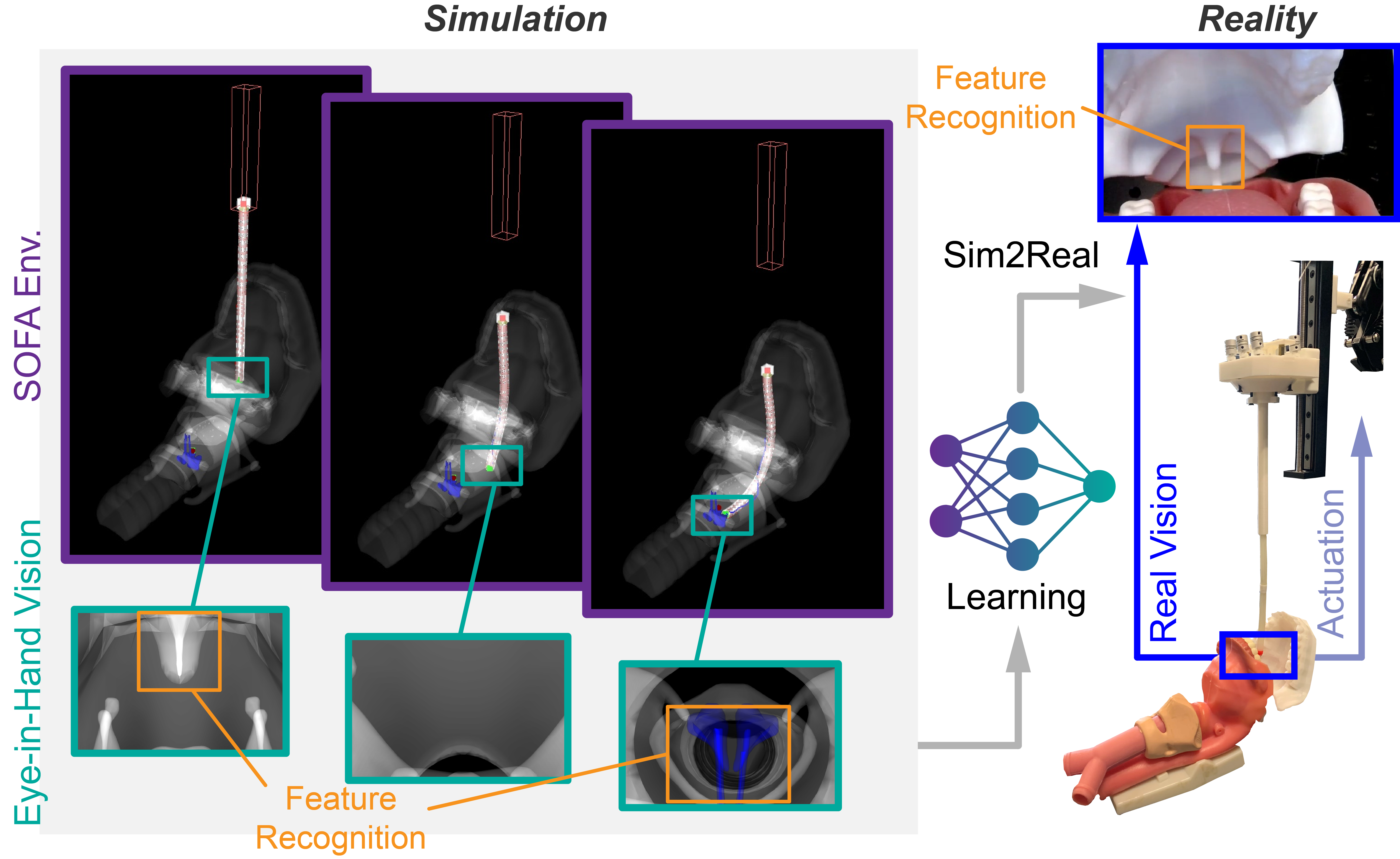

Jiewen Lai*, Tian-Ao Ren* (co-first author), Wenchao Yue, Shijian Su, Jason Chan, Hongliang Ren. IEEE Transactions on Industrial Informatics (T-II), 2023. Supplementary Video / Paper. TL;DR: Transferring the navigation strategy that a redundant soft robot learns from what it has seen in the SOFA-based virtual world to the real world.


Jiewen Lai*, Yanjun Liu*, Tian-Ao Ren, Yan Ma, Tao Zhang, Jeremy Teoh, Mark R. Cutkosky, Hongliang Ren. Under second-round review at Nature Communications, 2025.


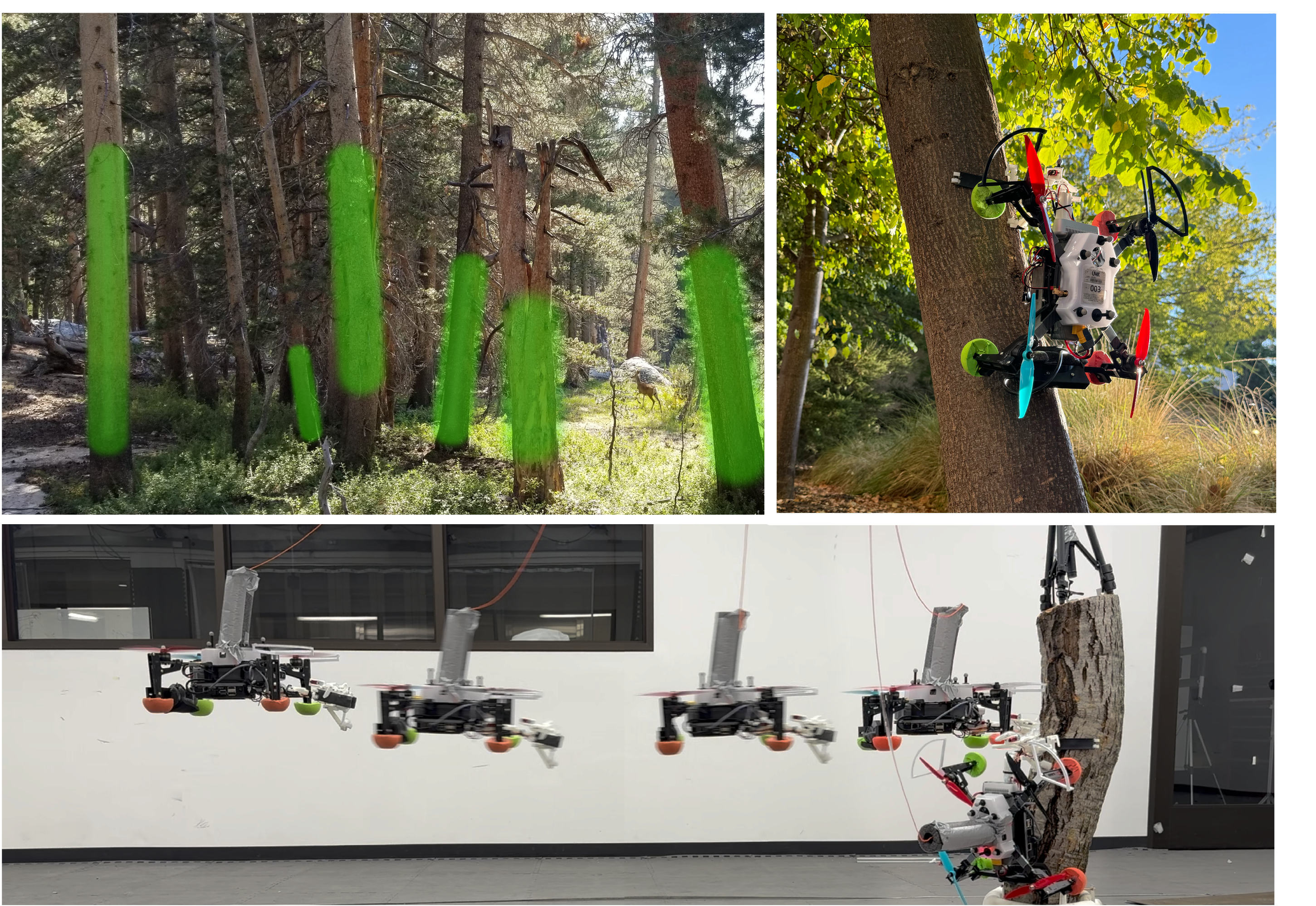

Julia Di, Kenneth A. W. Hoffmann, Tony G. Chen, Tian-Ao Ren, Mark R. Cutkosky. IEEE Aerospace Conference, 2026.


Jiewen Lai*, Tian-Ao Ren* (co-first author), Pengfei Ye, Yanjun Liu, Jingyao Sun, Hongliang Ren. International Journal of Robotics Research (IJRR), 2025. Paper. TL;DR: Embodying gravity sensation in soft slender robots with a minimalist setup.


Tian-Ao Ren, Jorge Garcia, Seongheon Hong, Jared Grinberg, Hojung Choi, Julia Di, Hao Li, Dmitry Grinberg, Mark R. Cutkosky. Design of Medical Devices Conference (DMD), 2026. arXiv. TL;DR: Combining vision-based tactile imaging with force-torque sensing enables robots to reliably detect subsurface tendon features during physiotherapy palpation, where force signals alone are often ambiguous, while still maintaining safe and controlled contact.


Emj Rennich, Tian-Ao Ren, Jihyeon Kim, Julia Di, Tony G. Chen, Mark R. Cutkosky. IEEE Transactions on Field Robotics (T-FR), 2025. TL;DR: Gecko-inspired dry adhesives enable gentle robotic grasping in extreme cold, fail below approximately -60 °C, and can be reliably restored at much lower temperatures using brief local heating and appropriate preload.


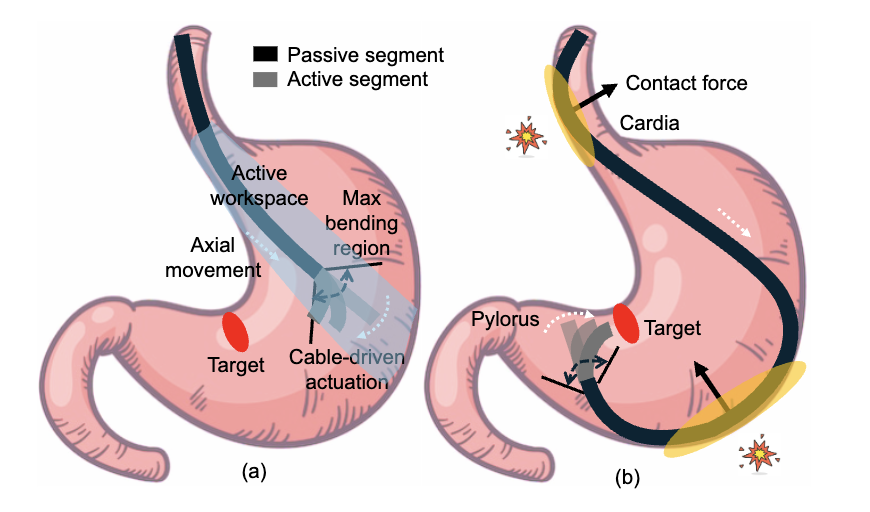

Chikit Ng, Huxin Gao, Tian-Ao Ren, Jiewen Lai, Hongliang Ren. IEEE International Conference on Robotics and Biomimetics (ROBIO), 2025. Best Paper Award. TL;DR: A force-informed deep reinforcement learning strategy enables flexible robotic endoscopes to exploit contact with deformable stomach walls for robust, high-precision navigation in dynamic environments, significantly outperforming contact-agnostic policies and generalizing to unseen disturbances.


Chengyi Xing*, Hao Li*, Yi-Lin Wei, Tian-Ao Ren, Tianyu Tu, Yuhao Lin, Elizabeth Schumann, Wei-Shi Zheng, Mark Cutkosky. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), accepted. Project page / arXiv. TL;DR: We present the design of FBG-based wearable tactile sensors capable of transferring tactile data collected by human hands to robotic hands.


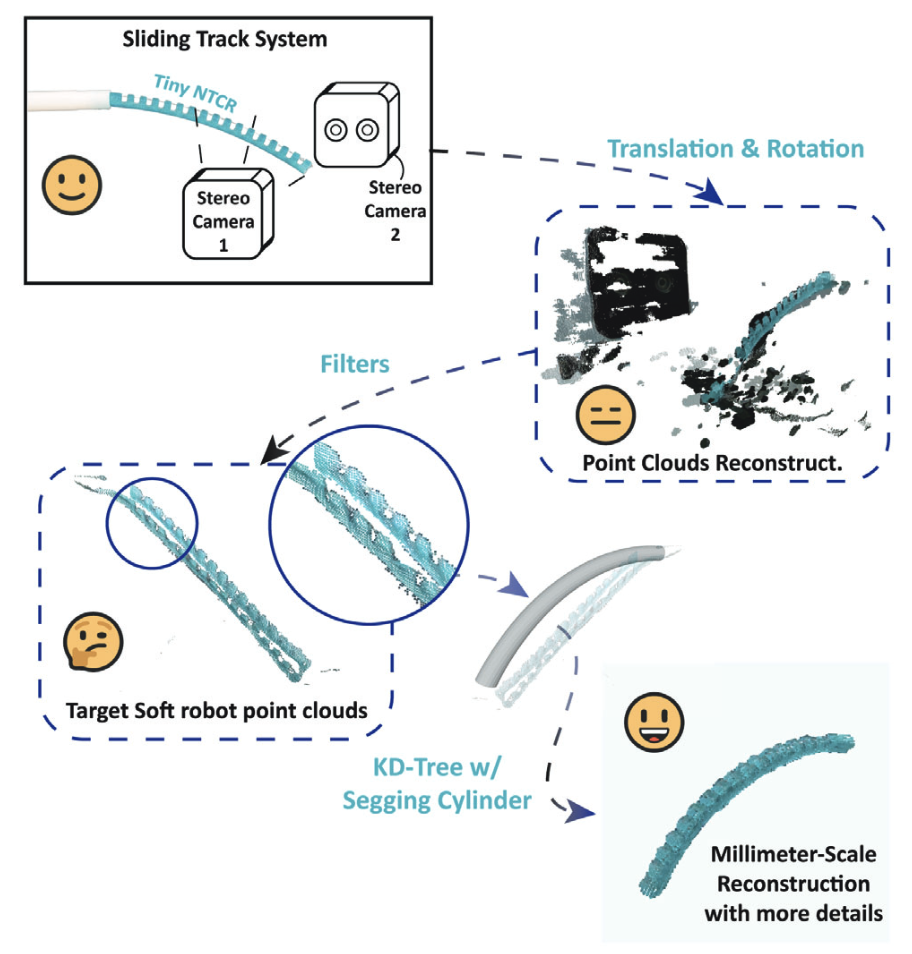

Tian-Ao Ren, Wenyan Liu, Tao Zhang, Lei Zhao, Hongliang Ren, Jiewen Lai. IEEE International Conference on Robotics and Biomimetics (ROBIO), 2024. Paper. TL;DR: A dual stereo vision system with geometry-guided point-cloud relocation enables accurate 3D morphological reconstruction of millimeter-scale soft continuum robots, recovering fine notch-level details despite low-resolution depth sensing.


Chikit Ng, Huxin Gao, Tian-Ao Ren, Jiewen Lai, Hongliang Ren. IEEE International Conference on Advanced Robotics and Its Social Impacts (ARSO), 2024. Paper. TL;DR: A model-free deep reinforcement learning controller enables tendon-driven flexible endoscopes to autonomously navigate in both free space and contact-rich environments, achieving over 90% success within clinical accuracy by retraining policies learned in free space for contact scenarios.


Guankun Wang, Tian-Ao Ren, Jiewen Lai, Long Bai, Hongliang Ren. Medical & Biological Engineering & Computing (MBEC), 2023. Paper. TL;DR: A domain-adaptive sim-to-real framework combining IoU-guided image blending and style transfer enables accurate and stable segmentation of oropharyngeal organs from synthetic data, significantly improving real-world performance for robotic intubation despite limited real images.


Jiewen Lai*, Tian-Ao Ren* (co-first author), Wenchao Yue, Shijian Su, Jason Chan, Hongliang Ren. IEEE Transactions on Industrial Informatics (T-II), 2023. Supplementary Video / Paper. TL;DR: Transferring the navigation strategy that a redundant soft robot learns from what it has seen in the SOFA-based virtual world to the real world.


Reviewer for IROS 2024; ICRA 2024/2025/2026; IEEE Transactions on Medical Robotics and Bionics (T-MRB); IEEE Transactions on Industrial Informatics (T-II)


Course Assistant for ME 327: Design and Control of Haptic Systems, Stanford University, Spring 2025

Mentorship: Jorge Garcia (M.S. at Stanford)


Best Paper Award, ROBIO 2025; Best Poster Award, ICRA Workshop 2023; Outstanding Graduates in Beijing, 2023; National Scholarship of China, 2022
